The true cost of AWS

In a previous post, The True Cost of Azure, I outlined some of the pitfalls organizations face in the shift to the cloud, such as virtualization sprawl, unplanned expenditure, and cost spikes caused by zombie VMs and lack of control. In this post, I turn my focus to AWS. Like Azure, AWS services are fantastic business enablers, but they have created a dilemma for IT and the CIO: how do you stay in control of IT costs when the business has reduced your visibility?

At one time, building the IT infrastructure and managing the software that runs on it was the sole responsibility of the IT department. Control was centralized, and cost was transparent. But the cloud has changed that.

Supported by automation and self-service, the cloud has enabled business users to spin up virtualized infrastructure and run software on it, where and when they need it. This distributes both control and cost, and leaves organizations with no insight into their cloud environments: what hardware they have, what software is running on it, who in the organization created each instance, and what license compliance and security risks they are exposed to.

The central cloud challenge is to deliver benefits to business users while remaining in control, and it is likely to become harder to overcome as cloud adoption accelerates. According to Gartner, “IT spending is forecast to grow from $3.5 trillion in 2015, to $3.9 trillion by 2020. This represents a compound annual growth rate (CAGR) of 2.0% from 2015 through 2020. During the same period, the aggregate market for public cloud services is expected to grow at a significantly higher CAGR of 17.5%”, with IaaS set to grow at a CAGR of 30.5% over the same period [1].

This challenge leaves IT in the problematic position of delivering support, managing infrastructure, ensuring compliance, managing security, and predicting cost for hardware and software that users elsewhere in the organization have commissioned and may or may not be using.

WHAT DOES A VM COST?

Right-sizing a VM is a complex calculation that relies on accurate estimates for several provisioning parameters: computing power, number of cores, memory, operating system, server services (for example, SQL or mail), and where, geographically, the VM will run. For example, AWS hourly rates for general purpose hardware running Windows in the US East (N. Virginia) region translate to an annual cost of up to USD 57,000. But whatever the cost, lack of control is the most significant contributor to soaring spend. The percentage of unused VMs varies, with some estimates indicating that up to 50% of your cloud network could be comatose. So even if you right-size a VM at the outset, VM spend tends to run amok without proper controls for decommissioning.
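The arithmetic behind these figures is simple but worth making explicit. The sketch below is a minimal illustration, assuming a VM runs around the clock (8,760 hours a year) and using hypothetical rates: USD 6.50 an hour is roughly what it takes to reach an annual cost of about USD 57,000, and the idle fraction stands in for the "up to 50%" comatose estimate. None of these numbers are current AWS prices.

```python
# Sketch: hourly on-demand rate -> annual cost, and the share of fleet
# spend lost to idle ("comatose") VMs. All rates are hypothetical inputs.

HOURS_PER_YEAR = 24 * 365  # 8,760 hours; assumes the VM never stops


def annual_cost(hourly_rate_usd: float) -> float:
    """Annual cost of one VM that runs around the clock."""
    return hourly_rate_usd * HOURS_PER_YEAR


def idle_spend(fleet_size: int, hourly_rate_usd: float, idle_fraction: float) -> float:
    """Annual spend on the part of a fleet that runs but does no useful work."""
    if not 0.0 <= idle_fraction <= 1.0:
        raise ValueError("idle_fraction must be between 0 and 1")
    return fleet_size * idle_fraction * annual_cost(hourly_rate_usd)


if __name__ == "__main__":
    print(annual_cost(6.50))                  # 56940.0
    print(round(idle_spend(100, 0.10, 0.5)))  # 43800
```

The point of separating the two functions is that right-sizing lowers the hourly rate, while decommissioning lowers the idle fraction; both multiply into the same bill.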

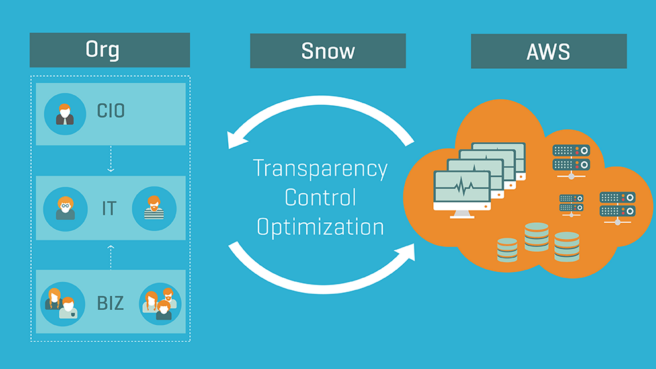

If your organization relies on cloud services like AWS, or if you are shifting toward IaaS/SaaS, gaining insight is essential for IT and the CIO, as it enables control and optimization. IT can regain visibility by managing a few challenges:

- Right-sizing VMs

- Upfront commitment

- Controlling virtualization sprawl

- The license implications of elastic-cloud scaling

- The transition from on-premises to cloud: what software licenses can you take with you?

Provisioning, VM sprawl, and scaling can be solved with tools and automation. Knowing which software licenses you can take with you is harder, because the answer varies, and it varies massively. Even if I could provide the answers, chances are that the licensing terms and agreements will have changed by the time you read this post. But by paying attention to a couple of typical licensing parameters, you can ease your organization into AWS without breaching the terms of your license agreements. I’ll get back to that.

RIGHT-SIZING VMs

But first, let’s discuss some of the fundamentals. To create cloud services with AWS in a cost-effective way, accurately estimating capacity is essential. But how can you do this without first running the service? The tendency is to overprovision to avoid performance issues. But remember that AWS prices roughly double between instance levels (as the rightmost column in Figure 1 illustrates). So, knowing your usage pattern over time is a vital piece of insight that enables optimization.
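One way to act on a usage pattern is to size against a high percentile of observed demand rather than the single highest spike. The sketch below is a minimal illustration, assuming you have exported samples of vCPUs in use from your monitoring. The ladder uses the vCPU counts of the m4 family, but the relative prices (1, 2, 4, 8) are hypothetical stand-ins for the doubling pattern, and the 95th percentile and 20% headroom are illustrative defaults, not AWS guidance.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class InstanceSize:
    name: str
    vcpus: int
    relative_price: float  # hypothetical: price relative to the smallest size


# vCPU counts follow the m4 family; relative prices are illustrative only.
LADDER = [
    InstanceSize("m4.large", 2, 1.0),
    InstanceSize("m4.xlarge", 4, 2.0),
    InstanceSize("m4.2xlarge", 8, 4.0),
    InstanceSize("m4.4xlarge", 16, 8.0),
]


def percentile(samples: list[float], p: float) -> float:
    """Nearest-rank percentile (p between 0 and 100) of a non-empty list."""
    ordered = sorted(samples)
    rank = max(1, math.ceil(p / 100 * len(ordered)))
    return ordered[rank - 1]


def right_size(vcpu_samples: list[float], p: float = 95.0,
               headroom: float = 0.2) -> InstanceSize:
    """Smallest size covering the p-th percentile of demand plus headroom."""
    needed = percentile(vcpu_samples, p) * (1 + headroom)
    for size in LADDER:
        if size.vcpus >= needed:
            return size
    return LADDER[-1]  # demand exceeds the ladder: consider scaling out instead


if __name__ == "__main__":
    # Hypothetical vCPU-in-use samples: mostly quiet, with two spikes.
    samples = [1.5] * 18 + [3.0, 7.5]
    print(right_size(samples).name)         # m4.xlarge
    print(right_size(samples, p=100).name)  # m4.4xlarge
```

In this example, sizing for the single highest spike (p=100) selects a size at four times the relative price of the percentile-based choice, which is exactly the overprovisioning the doubling price ladder punishes.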

Figure 1: Example on-demand AWS prices (December 2016)

UPFRONT COMMITMENT

The default behavior of spinning up resources on demand may be easier in the short term, but you can achieve considerable savings if you can make an upfront commitment. According to Amazon, you can save up to 75% with Reserved Instances (RIs) compared with on-demand prices, depending on how much you pay upfront and how long a term you commit to. Because you pay for an RI whether or not you use it, RIs are ideal for static, always-on services.

Any usage beyond the capacity your RIs cover is automatically billed at the on-demand rate. Adoption of RIs is relatively low compared with on-demand usage, because customers worry about being locked in when business needs fluctuate. However, provisioning steady services on RIs brings an immediate cost benefit. Convertible RIs allow you to change provisioning parameters during the term, and for fixed (Standard) RIs, Amazon provides the Reserved Instance Marketplace, where you can sell unused capacity.
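The RI trade-off reduces to a break-even utilization: the RI's effective hourly cost divided by the on-demand rate. The sketch below is a simplified model under stated assumptions: the rates are hypothetical (an all-upfront, one-year RI priced at a 75% discount), reserved capacity is paid for every hour of the term, and any usage above it is billed on demand. It ignores real-world details such as regional scope and partial-upfront payment schedules.

```python
HOURS_PER_YEAR = 8760


def ri_effective_hourly(upfront_usd: float, hourly_usd: float, term_years: int) -> float:
    """Upfront payment spread over every hour of the term, plus any hourly fee."""
    return upfront_usd / (term_years * HOURS_PER_YEAR) + hourly_usd


def break_even_utilization(ri_hourly: float, on_demand_hourly: float) -> float:
    """Share of hours an instance must run before the RI is the cheaper option."""
    return ri_hourly / on_demand_hourly


def blended_cost(hourly_counts: list[int], reserved: int,
                 ri_hourly: float, on_demand_hourly: float) -> float:
    """Reserved capacity is paid every hour; usage above it is billed on demand."""
    reserved_cost = reserved * ri_hourly * len(hourly_counts)
    overflow_hours = sum(max(0, used - reserved) for used in hourly_counts)
    return reserved_cost + overflow_hours * on_demand_hourly


if __name__ == "__main__":
    on_demand = 0.10                          # hypothetical USD/hour
    ri = ri_effective_hourly(219.0, 0.0, 1)   # hypothetical all-upfront, 1-year RI
    print(round(ri, 4))                                       # 0.025
    print(round(break_even_utilization(ri, on_demand), 2))    # 0.25
    day = [2] * 20 + [4] * 4   # steady 2 VMs, peaking at 4 for 4 hours
    for reserved in (0, 2, 4):
        print(reserved, round(blended_cost(day, reserved, ri, on_demand), 2))
```

With these inputs, reserving only the steady baseline of two VMs costs 2.0 per day, against 5.6 fully on demand and 2.4 when reserving for the peak, which matches the advice to put steady services on RIs and let the peaks run on demand.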

SOFTWARE LICENSES IN THE CLOUD

So, back to the issue of software licensing in the cloud. Let’s say you have decided to shift to a cloud-based infrastructure. You’ve chosen AWS for your compute, storage, networking, and memory needs. But what about your software? Can you bring your existing licenses (bring your own license, BYOL)? In some cases, software publishers will allow you to run your software on a cloud platform like AWS under your on-premises license for a given time.

Other license agreements dictate that you run your software on dedicated AWS infrastructure. In still other cases, you may have no option but to write off your perpetual licenses and switch to subscription. However, most publishers will allow you to convert your perpetual licenses into subscription-based ones, and will even offer good deals when they are trying to encourage customers to make the shift.

THE SCALING DILEMMA

Let’s say you are running an ERP application on your VMs which, for performance reasons, is set up to scale (spin up more VMs) to cope with demand at month and quarter end. If the software is licensed per core, and VMs are created to handle load peaks, then the number of licenses you need to stay compliant increases with the number of VMs that are spun up. Yet you have no control over this, short of banning every application from running on an elastic model, which rather defeats the purpose of using the cloud. And you won’t find out until it’s too late, when you’ve got an audit on your hands. Well, that’s perhaps not strictly true. The data is there; you just need to be able to find it.
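If you can export how many VMs were running the application over time, peak license demand can be derived with a few lines of arithmetic. This is a hypothetical sketch. It assumes that licenses must cover the peak number of cores deployed and that the terms may impose a minimum number of core licenses per VM. Check both assumptions against your actual agreement. `ScalingSample` is an illustrative type, not part of any product.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ScalingSample:
    timestamp: str  # when the sample was taken, e.g. "2016-12-30T23:00"
    vm_count: int   # ERP VMs running at that moment


def cores_to_license(vm_count: int, vcpus_per_vm: int, min_cores_per_vm: int = 0) -> int:
    """Cores to license for vm_count VMs; some terms set a per-VM minimum (assumption)."""
    return vm_count * max(vcpus_per_vm, min_cores_per_vm)


def license_shortfall(samples: list[ScalingSample], vcpus_per_vm: int,
                      owned_core_licenses: int,
                      min_cores_per_vm: int = 0) -> tuple[int, str, int]:
    """Return (cores needed at peak, time of the peak, cores not covered)."""
    peak = max(samples, key=lambda s: s.vm_count)
    needed = cores_to_license(peak.vm_count, vcpus_per_vm, min_cores_per_vm)
    return needed, peak.timestamp, max(0, needed - owned_core_licenses)


if __name__ == "__main__":
    # Hypothetical month-end scale-out: 4 VMs normally, 10 at the peak.
    history = [
        ScalingSample("2016-12-15T12:00", 4),
        ScalingSample("2016-12-30T23:00", 10),
        ScalingSample("2016-12-31T08:00", 6),
    ]
    print(license_shortfall(history, vcpus_per_vm=2, owned_core_licenses=16,
                            min_cores_per_vm=4))  # (40, '2016-12-30T23:00', 24)
```

Returning the timestamp of the peak alongside the shortfall makes it easier to trace the exposure back to a specific scale-out event before an auditor does.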

VM SPRAWL IS EATING YOUR CASH

One of the benefits of cloud computing, the ease of spinning up infrastructure at the point of need, is unfortunately also one of its drawbacks. The ability to spin up a VM in an instant often leads to over-dimensioning (too much CPU, storage, networking capacity, and memory). And once the business need has been satisfied, VMs are often forgotten, yet they are still eating up your cash.

Just one medium-sized AWS virtual machine, a t2.medium, costs around USD 50 per month. Now think about a scenario where hundreds of machines like this one, or worse still, high-performance instances, are lying idle.
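To put a number on idle machines, you can flag VMs whose CPU never came close to doing real work and total their monthly cost. This is a minimal sketch with hypothetical inputs. It uses 730 hours per month as a common billing approximation, a 5% peak-CPU threshold as an illustrative cut-off for "idle", and USD 0.068 per hour as a rate that lands near the USD 50 a month mentioned above. The instance IDs are made up.

```python
from dataclasses import dataclass

HOURS_PER_MONTH = 730  # common approximation: 8,760 hours / 12


@dataclass(frozen=True)
class VmUsage:
    instance_id: str
    hourly_rate_usd: float
    peak_cpu_percent: float  # highest CPU utilization over the review window


def find_idle(vms: list[VmUsage], cpu_threshold: float = 5.0) -> list[VmUsage]:
    """VMs whose peak CPU stayed below the threshold during the window."""
    return [vm for vm in vms if vm.peak_cpu_percent < cpu_threshold]


def monthly_waste(vms: list[VmUsage], cpu_threshold: float = 5.0) -> float:
    """Monthly on-demand cost of the VMs flagged as idle."""
    return sum(vm.hourly_rate_usd * HOURS_PER_MONTH
               for vm in find_idle(vms, cpu_threshold))


if __name__ == "__main__":
    # 200 hypothetical idle VMs at USD 0.068/hour (about USD 50/month each)
    fleet = [VmUsage(f"i-idle-{n}", 0.068, 1.0) for n in range(200)]
    fleet.append(VmUsage("i-busy", 0.068, 80.0))
    print(len(find_idle(fleet)))           # 200
    print(round(monthly_waste(fleet), 2))  # 9928.0
```

A peak-based test is deliberately conservative: a VM that spiked once during the window is not flagged, which reduces the risk of switching off something that is rarely used but still needed.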

ENABLING OPTIMIZATION

Let’s look back at the ERP example. The IT manager, CIO, and CFO all agree that running this software on AWS makes sense, as it provides maximum productivity and flexibility given the organization’s current needs. But how do you enable optimization as needs change? What these IT stakeholders need is insight. To right-size the underlying AWS infrastructure, they need to know who in the organization uses the software and how much it is used.

Snow can provide this insight.

Snow Automation Platform includes out-of-the-box functionality for AWS optimization that automates the process of spinning up virtual resources, giving users the power of the cloud, but with the vital addition of control. By ensuring that resources are ordered with a decommissioning date, which can be extended, zombie VMs will become extinct. Educational environments, for example, can be removed once training is complete, and development VMs can be turned off at night and during weekends. By including approval steps or automatic budget limits, the risks of overspend and over-dimensioning drop dramatically. And by ensuring that AWS tags (such as cost center, price, and user) are populated during ordering, you gain better insight into who in the business is responsible for each virtualized instance.
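Decommission dates, mandatory tags, and office-hours shutdowns can all be expressed as simple policy checks. This is a minimal, hypothetical sketch, not how Snow Automation Platform implements these controls. The tag keys `CostCenter`, `Owner`, and `DecommissionDate` are assumptions. Instances are plain dictionaries loosely modeled on the Key/Value tag lists the EC2 API returns. The 07:00 to 19:00 weekday window is an illustrative choice.

```python
from datetime import date, datetime

# Hypothetical tagging policy; these keys are assumptions, not an AWS or Snow standard.
REQUIRED_TAGS = {"CostCenter", "Owner", "DecommissionDate"}


def tag_map(instance: dict) -> dict:
    """Flatten EC2-style [{"Key": k, "Value": v}, ...] tags into a plain dict."""
    return {t["Key"]: t["Value"] for t in instance.get("Tags", [])}


def review(instance: dict, today: date) -> list[str]:
    """Policy findings for one instance; an empty list means compliant."""
    tags = tag_map(instance)
    findings = [f"missing tag {key}" for key in sorted(REQUIRED_TAGS - set(tags))]
    expiry = tags.get("DecommissionDate")
    if expiry is not None and date.fromisoformat(expiry) < today:
        findings.append(f"past decommission date {expiry}")
    return findings


def outside_office_hours(now: datetime, start_hour: int = 7, end_hour: int = 19) -> bool:
    """True at night and at weekends, when a development VM could be stopped."""
    if now.weekday() >= 5:  # Saturday is 5, Sunday is 6
        return True
    return not (start_hour <= now.hour < end_hour)


if __name__ == "__main__":
    vm = {
        "InstanceId": "i-0example",  # hypothetical ID
        "Tags": [
            {"Key": "Owner", "Value": "jdoe"},
            {"Key": "DecommissionDate", "Value": "2016-12-01"},
        ],
    }
    print(review(vm, date(2016, 12, 15)))
    # ['missing tag CostCenter', 'past decommission date 2016-12-01']
    print(outside_office_hours(datetime(2016, 12, 17, 12, 0)))  # True (a Saturday)
```

Keeping the checks as pure functions over plain data means the same rules can run at ordering time, to reject an incomplete request, and on a schedule, to catch instances whose decommission date has passed.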

Snow Inventory tracks usage of software, even when it is running on AWS. Usage information enables IT to reassign unused licenses to other users, and to downsize or upsize when software is up for renewal. But usage information matters even more for software running on AWS, because unused software is not simply wasting licenses; it is also running up infrastructure costs unnecessarily. Together with compliance information delivered by Snow License Manager, Snow can enable your enterprise to build a flexible IT infrastructure, unleashing the full power of the cloud into the organization, maximizing productivity and improving user satisfaction, while assuring software license compliance. At the same time, Snow gives IT stakeholders an efficient way to gain the right level of insight into the entire IT estate, supporting cost optimization, internal billing, and accurate IT forecasts.
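Identifying reclaimable licenses from usage data comes down to a date comparison. The sketch below assumes a hypothetical mapping from each license holder to the date they last used the application, with `None` meaning never used. It also assumes a 90-day idle window. It is an illustration of the idea, not Snow Inventory's actual logic.

```python
from datetime import date, timedelta
from typing import Optional


def reclaim_candidates(last_used: dict[str, Optional[date]], today: date,
                       idle_days: int = 90) -> list[str]:
    """Users whose assigned license was never used, or not used within idle_days."""
    cutoff = today - timedelta(days=idle_days)
    return sorted(user for user, used in last_used.items()
                  if used is None or used < cutoff)


if __name__ == "__main__":
    # Hypothetical last-used dates for one application's license holders.
    usage = {
        "alice": date(2016, 12, 1),
        "bob": date(2016, 6, 30),
        "carol": None,  # license assigned but never launched
    }
    print(reclaim_candidates(usage, date(2016, 12, 15)))  # ['bob', 'carol']
```

For software hosted on AWS, the same list can also point to instances that are running only for idle users, which is where the infrastructure saving comes from.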

Are you having difficulties unleashing the full power of your AWS services? Contact Snow Software today. We can help you stay compliant and optimize spend as you transition.

References:

- Gartner, May 2016, Market Insight: Cloud Shift — The Transition of IT Spending From Traditional Systems to Cloud, available at: https://www.gartner.com/doc/3321217/market-insight-cloud-shift-