Considering AI? Snow Leadership Suggests Cautious Experimentation

We’ve seen emerging technologies come onto the scene since the dawn of the internet. Websites. Email. Cloud computing. Faster cloud computing. IoT. Generative AI is no different from these evolutions…except maybe it is. AI lets people speak to technology more directly. The opportunities are endless, but the potential risks are very real too.

I recently sat down with two of our executives, Steve Tait, Chief Technology Officer and EVP, R&D, and Alastair Pooley, Chief Information Officer, to discuss AI: why it has captured the world’s interest, how organizations can benefit from its potential, and how to navigate the risks of this next big development.

Q&A with Snow

Q: As a member of the Snow Software executive team, what are your thoughts on generative AI and the impact the new technology is having on the business landscape?

Steve: The launch of generative AI has been nothing short of amazing. The industry has been looking at AI and large language models, which have been developing slowly over the last couple of years. Then, in late 2022, ChatGPT was released, and it felt like the world transformed overnight. The possibilities it delivers, the accelerated power of this technology…it’s now available to all of us and everyone can see the immediate benefits.

Al: It’s hugely exciting. There’s obviously a lot of interest in how it can make people more productive and enhance what they are able to do.

Q: When considering whether to bring AI into your own organization, where do you start?

Steve: Every organization is different and every company’s appetite for risk varies. For example, banks and healthcare organizations handle very sensitive customer information, and regulations require it to be handled in a very specific way. But if, for example, you’re a startup selling software, you may not have those same levels of responsibility. Regardless of your ability and willingness to try new technology, everyone should take a methodical, risk-based approach.

Al: Many organizations are taking the approach of ‘cautious experimentation’. You want to learn about it, try it out and even adopt it, but it’s critical that you do so in a safe way. How can you learn about it and gain the benefits without running into real problems?

Q: How has Snow brought AI into our organization? Can you describe the process?

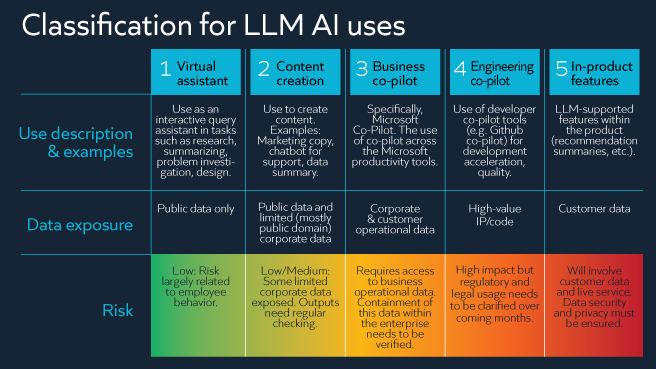

Steve: We started by breaking down different possible AI use cases, exploring where we might use this technology and gain value. One of our use cases is a virtual assistant for finding information, which is relatively low-risk and likely to produce good results. We also created one for content creation and one for business copilot technologies, each with slightly more risk but also its own rewards. Other use cases we’ve developed include engineering copilot technologies, where we’re looking at building source code with AI. And finally, there is an in-product use case.

We’ve also created a group within Snow called Snow Labs. They work in partnership with our customers to explore how new and cutting-edge technologies might be used for our products. They are a highly trained team with respect to what should be shared with various models and what shouldn’t. So, we’re blending innovation with caution.

Q: What are some of the risks being discussed at Snow and concerns that you’ve heard Snow customers express?

Al: When it comes to risk, there are many areas to consider. Think about your organization’s reputation. If you’re using AI to generate content, are you checking accuracy and quality? Sub-standard material can damage your corporate image. Copyright infringement can be both a brand reputation issue and a legal one, and copyright lawsuits are already underway.

Exposing intellectual property is another risk, and Samsung is the perfect example. The company banned employee use of generative AI tools on company devices after sensitive company data was found to have been uploaded to ChatGPT. Employees were inadvertently exposing corporate intellectual property by copying and pasting it into prompts.

The regulatory and legal landscapes are other important risk considerations. Data privacy laws like GDPR must be adhered to, so what does that mean for public generative AI models? Hiring is another issue companies are talking about. AI tools have been known to bring bias into the process, so that’s something to watch for.

Q: How do you navigate all these risks?

Steve: Part of how we look at AI and make it real at Snow is setting acceptable use policies. This means we define the tools our users are allowed to use. There are something like 60 different AI models being published each month, and that number is growing every day. Defining and communicating a policy on what can be used is key.

Acceptable use policies aren’t effective, though, without regularly monitoring what’s happening on your network. We use our own software for this, through an initiative called Snow Using Snow. We have a specific recognition algorithm for AI within Snow SaaS Management, so we can look at what’s being used, catalog the usage, and report on which tools are being used by which users. This helps us keep an eye on whether we are adhering to the acceptable use policies.

Al: If you don’t have a software asset management or SaaS management platform that can help discover AI, your other option would be to look at your web control technology. This would help track browser usage. With increasingly hybrid work environments, it’s obviously a lot more difficult to manage, but it could at least offer you a starting point.
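
For readers who want to see the idea in concrete terms, the loop Steve and Al describe (define an approved list, catalog what is actually in use, and report on who is using what) can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the tool names, the `UsageRecord` shape and both functions are hypothetical, and it is not how Snow SaaS Management or any web control product works internally. The usage records could come from whatever discovery source you have available.

```python
from collections import defaultdict
from dataclasses import dataclass

# Hypothetical allowlist standing in for an acceptable use policy.
APPROVED_AI_TOOLS = {"approved-assistant", "approved-copilot"}


@dataclass(frozen=True)
class UsageRecord:
    """One observed use of an AI tool (hypothetical record shape)."""
    user: str
    tool: str


def catalog_usage(records):
    """Group discovered usage by tool: tool name -> sorted list of users."""
    users_by_tool = defaultdict(set)
    for record in records:
        users_by_tool[record.tool].add(record.user)
    return {tool: sorted(users) for tool, users in users_by_tool.items()}


def find_policy_violations(records, approved=APPROVED_AI_TOOLS):
    """Return sorted (user, tool) pairs where the tool is not on the allowlist."""
    return sorted({(r.user, r.tool) for r in records if r.tool not in approved})


# Made-up sample data for illustration only.
records = [
    UsageRecord("alice", "approved-assistant"),
    UsageRecord("bob", "unvetted-chatbot"),
    UsageRecord("alice", "unvetted-chatbot"),
    UsageRecord("bob", "approved-copilot"),
]

catalog = catalog_usage(records)
violations = find_policy_violations(records)
print(catalog)
print(violations)
```

Keeping the catalog separate from the violation check mirrors the point made above: visibility comes first, and the policy is then applied to whatever that visibility reveals.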

Q: Any final thoughts?

Steve: Despite many of the potential risks, if well navigated, the opportunities of generative AI are tremendous.

Al: It’s important to remember that despite your best efforts at limiting or banning the technology, AI is going to be used by your employees. It may very well already be in use at your organization without your knowledge. Be proactive and approach it as you would any other emerging technology. Assess it. Put some controls, policies and guidelines in place and make sure you’re talking to your users.

Getting a handle on AI usage

Perspective is everything in today’s ever-changing tech landscape and you simply can’t monitor what you can’t see. Now, more than ever, IT teams and business leaders need end-to-end visibility across their ecosystems so they can minimize risk and keep their organizations secure.

If you’re interested in taking the first step and creating an AI Acceptable Use Policy, we’ve provided a template for free download. If you’re past policies and ready for data on AI use, request a demo now.